Prometheus Deployment and Usage

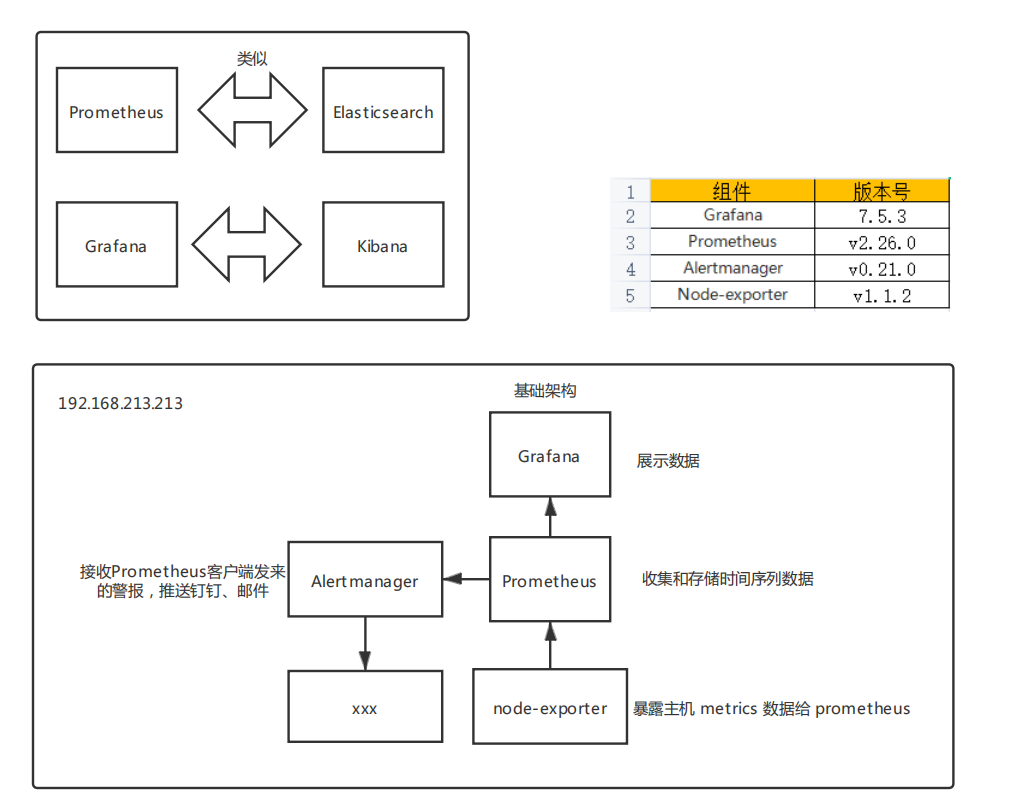

# Base Architecture

VM IP: 192.168.213.213. This deployment does not test alerting through Alertmanager and DingTalk.

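# Starting the Base Stack

# Upload the Deployment Package, Extract It, and Set Permissions

Deployment package

Link: https://pan.baidu.com/s/14-zsMG0NFPoeetqfWU2C0g

Extraction code: 4al9

Deployment package downloaded from the internet

Link: https://pan.baidu.com/s/17okgNvIhXx8Sjet12RCUgw

Extraction code: dw7j

After uploading the deployment package, create the directories and set their permissions:

    mkdir -p /home/prom/prometheus/data
    mkdir -p /home/prom/grafana
    chmod 777 /home/prom/prometheus/data
    chmod 777 /home/prom/grafana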

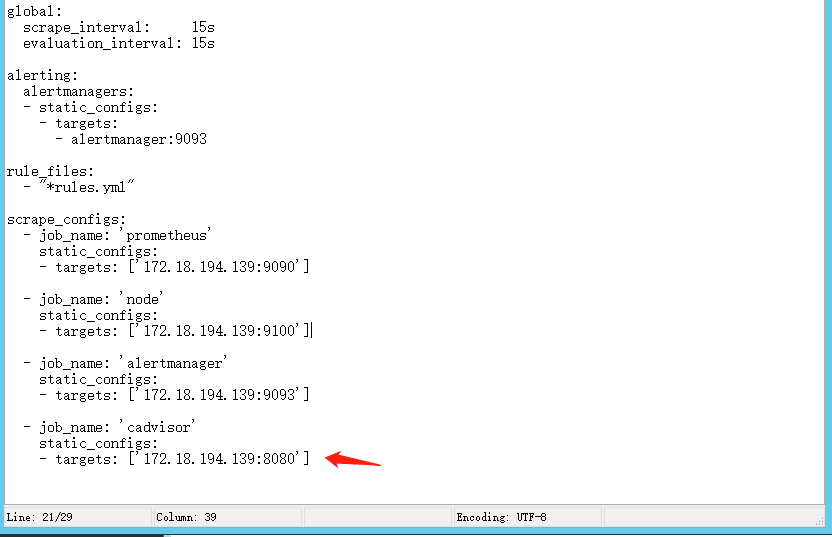

# Edit the Configuration File

In prometheus.yml, replace the target addresses with the host's IP address.
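The address change can be scripted instead of edited by hand. The following is a minimal sketch, assuming the shipped prometheus.yml uses localhost as its placeholder host (an assumption; check the actual file first). The function name set_target_ip is hypothetical, and the result is printed to standard output so the original file is left untouched.

```shell
# Sketch: print a copy of a Prometheus config with the placeholder host
# replaced by the VM's IP address.
# Assumption: the placeholder host in the file is "localhost".
set_target_ip() {
    config_file="$1"
    ip="$2"
    # Rewrite 'localhost:<port>' to '<ip>:<port>'; the port is kept as-is.
    sed "s/localhost:/${ip}:/g" "$config_file"
}
```

For example, `set_target_ip prometheus.yml 192.168.213.213 > prometheus.new.yml` writes the adjusted configuration to a new file that can be reviewed before it replaces the original.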

# Start

Change to the directory that contains docker-compose.yml and start the stack:

    cd /data/prometheus
    docker-compose up -d

# Access

Open the Prometheus targets page: http://IP:9090/targets

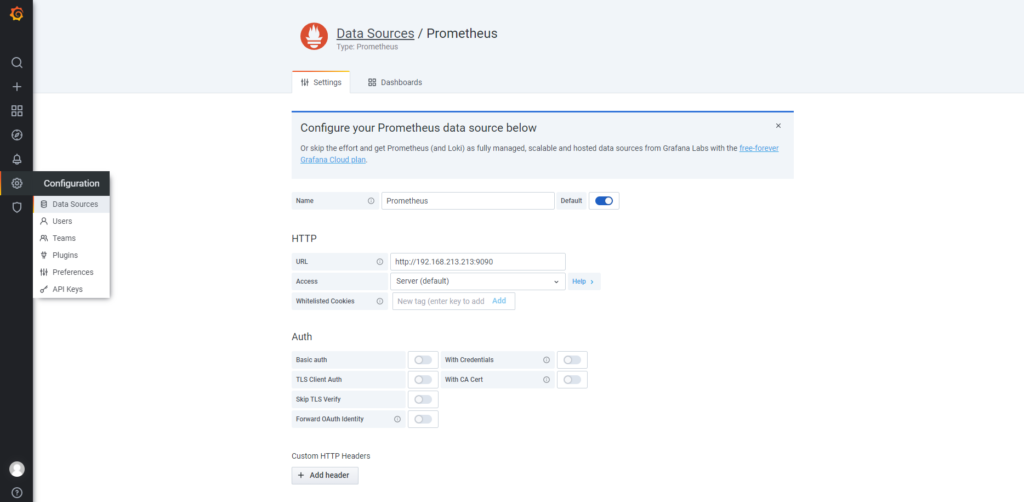
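The same target status is available from the Prometheus HTTP API at /api/v1/targets, which is handy for a quick check from the command line. The sketch below counts targets by health state in that JSON response; the function name count_targets_with_health is hypothetical, and the simple text match assumes the response is used as returned by Prometheus, without reformatting.

```shell
# Sketch: count targets in a given health state ("up" or "down").
# Reads the JSON body of /api/v1/targets from standard input.
count_targets_with_health() {
    state="$1"
    # Each active target carries a "health" field; count the matching ones.
    grep -o "\"health\"[[:space:]]*:[[:space:]]*\"${state}\"" | wc -l | tr -d ' '
}
```

A possible invocation is `curl -s http://IP:9090/api/v1/targets | count_targets_with_health down`, where any result other than 0 means at least one target needs attention.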

# Add a Data Source

Open Grafana at http://IP:3000; the username and password are both admin.

Add the data source:

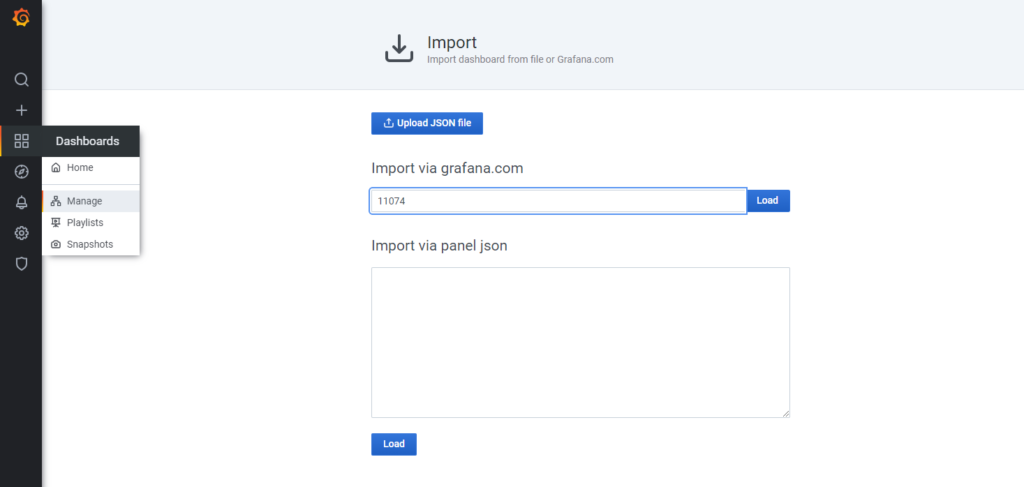

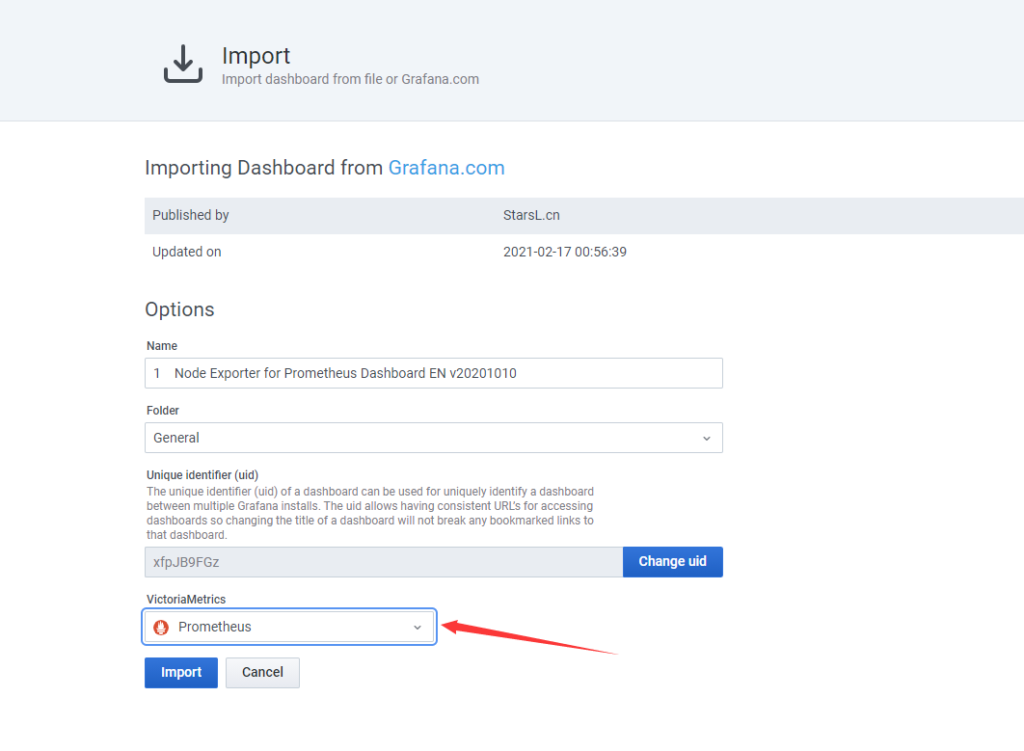

# Import a Dashboard

In Grafana, import dashboard ID 11074,

or the Chinese-language version, ID 12633.

Select the data source and import.

# Result

Screenshots of the English and Chinese dashboards

Link: https://pan.baidu.com/s/1X6MrwtjNaymdn4VpkFr9Zg

Extraction code: 6v71

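# Base Stack + MySQL + Redis

# Architecture Diagram

# MySQL and Redis Deployment Packages

Deployment package

Link: https://pan.baidu.com/s/12Rtcl5R7d3HgusjRLiYpNg

Extraction code: j0py

Set the permissions of both files to 777.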

# Monitoring MySQL

# Create a MySQL User for Monitoring

User: 'exporter', password: 'xxxxxxx', maximum connections: 3

    CREATE USER 'exporter'@'%' IDENTIFIED BY 'xxxxxxx' WITH MAX_USER_CONNECTIONS 3;
    GRANT PROCESS, REPLICATION CLIENT ON *.* TO 'exporter'@'%';
    GRANT SELECT ON performance_schema.* TO 'exporter'@'%';
    FLUSH PRIVILEGES;

# Start mysqld_exporter

docker-compose.yml

    version: '3.1'
    services:
      mysql_exporter:
        image: prom/mysqld-exporter:v0.12.1
        restart: always
        container_name: mysql_exporter
        ports:
          - "9104:9104"
        environment:
          - DATA_SOURCE_NAME=exporter:xxxxxxx@(192.168.213.212:3306)/

Or start it directly with a command:

    docker run -d -p 9104:9104 -e DATA_SOURCE_NAME="exporter:xxxxxxx@(192.168.213.212:3306)/" prom/mysqld-exporter:v0.12.1

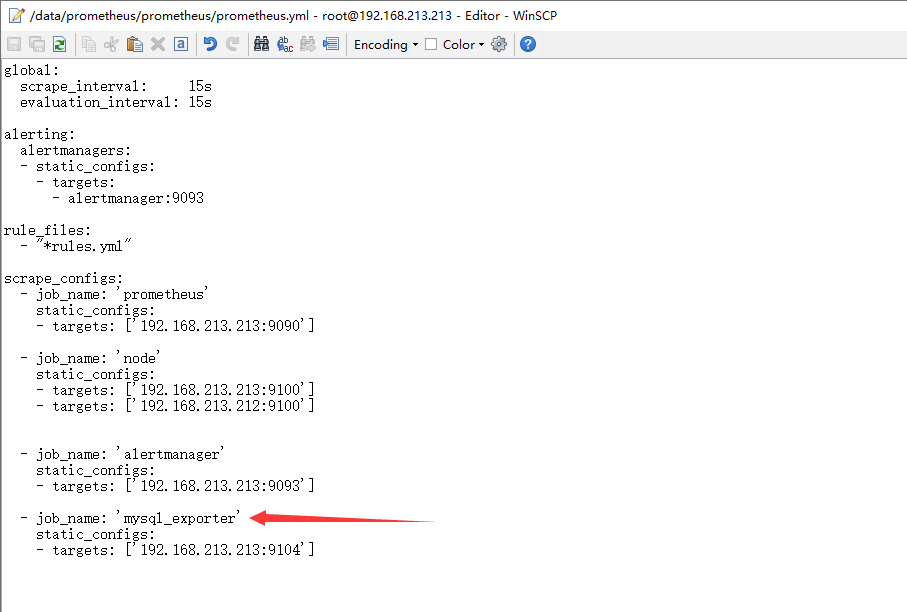
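The DATA_SOURCE_NAME value used above follows the pattern user:password@(host:port)/. As a small illustration of that layout, the sketch below assembles the string from its parts; the helper name build_mysql_dsn is hypothetical and the port defaults to 3306 when omitted.

```shell
# Sketch: assemble a DATA_SOURCE_NAME value in the form used above,
# user:password@(host:port)/
build_mysql_dsn() {
    user="$1"
    password="$2"
    host="$3"
    port="${4:-3306}"   # default MySQL port when none is given
    printf '%s:%s@(%s:%s)/\n' "$user" "$password" "$host" "$port"
}
```

The output can then be passed on, for example `docker run -d -p 9104:9104 -e DATA_SOURCE_NAME="$(build_mysql_dsn exporter xxxxxxx 192.168.213.212)" prom/mysqld-exporter:v0.12.1`.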

# Edit the Configuration File and Restart

In prometheus.yml, add the exporter as a scrape target; the exporter runs on 192.168.213.213.

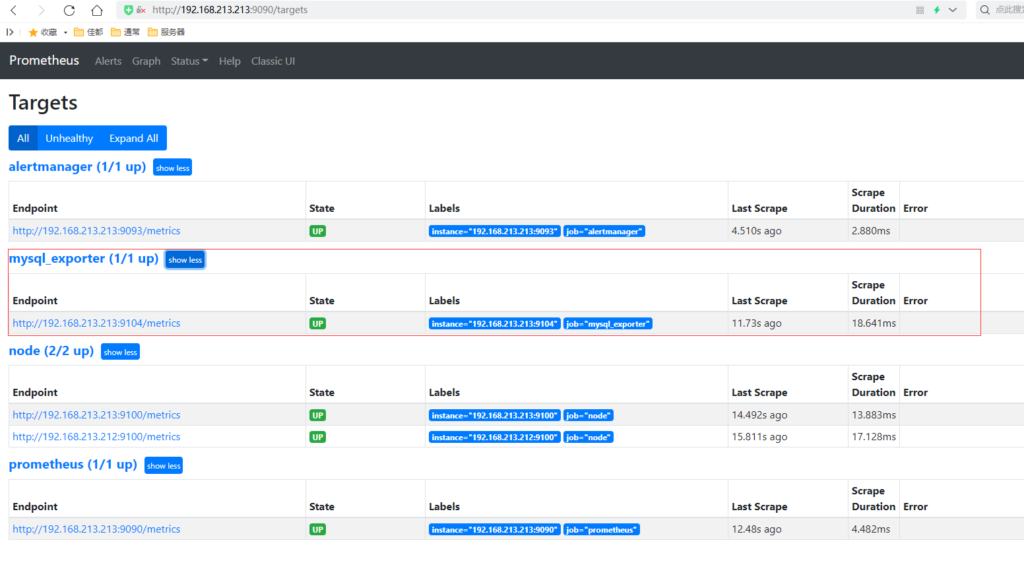

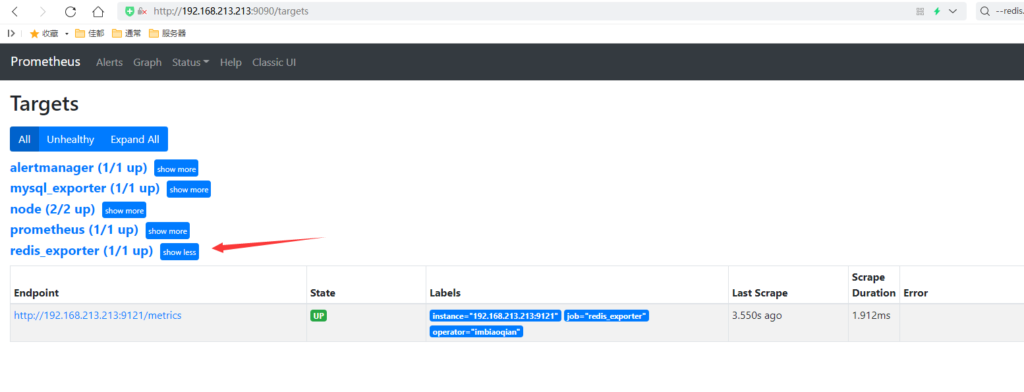

# Check Status

Open Prometheus and check the target status.
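Besides the Prometheus UI, the exporter's own /metrics output shows whether it can reach the database: mysqld_exporter reports mysql_up as 1 when the connection works. The sketch below reads a metric value from text in the Prometheus exposition format; the function name metric_value is hypothetical, and it assumes sample lines carry no trailing timestamp.

```shell
# Sketch: print the value of the first sample of a metric.
# Reads Prometheus text exposition format from standard input.
# Assumption: sample lines end with the value (no timestamp column).
metric_value() {
    awk -v name="$1" '
        /^#/ { next }                      # skip HELP/TYPE comment lines
        {
            metric = $1
            sub(/[{].*/, "", metric)       # drop an optional {label="..."} set
            if (metric == name) { print $NF; exit }
        }
    '
}
```

For example, `curl -s http://192.168.213.213:9104/metrics | metric_value mysql_up` should print 1 when the exporter can log in with the monitoring user.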

# Import a Dashboard

In Grafana, import the following dashboard file:

mysql-overview_rev5.json

Link: https://pan.baidu.com/s/18nH9oiWRv06KBQImn6MjOA

Extraction code: xm2v

# Result

Screenshots of the English and Chinese dashboards

Link: https://pan.baidu.com/s/1FzWnf4tr6nQN8fGBkVRjuA

Extraction code: x0bt

# Monitoring Redis

# Redis Account Information

With the deployment package above, the password is: redis5268

# Start redis_exporter

docker-compose.yml

    version: '3.1'
    services:
      redis_exporter:
        image: oliver006/redis_exporter:v1.22.0
        restart: always
        container_name: redis_exporter
        ports:
          - "9121:9121"
        command:
          # Arguments passed to the exporter
          --redis.addr redis://192.168.213.212:6379 --redis.password 'redis5268'

Or start it directly with a command:

    docker run -d --name redis_exporter -p 9121:9121 oliver006/redis_exporter:v1.22.0 --redis.addr redis://192.168.213.212:6379 --redis.password 'redis5268'

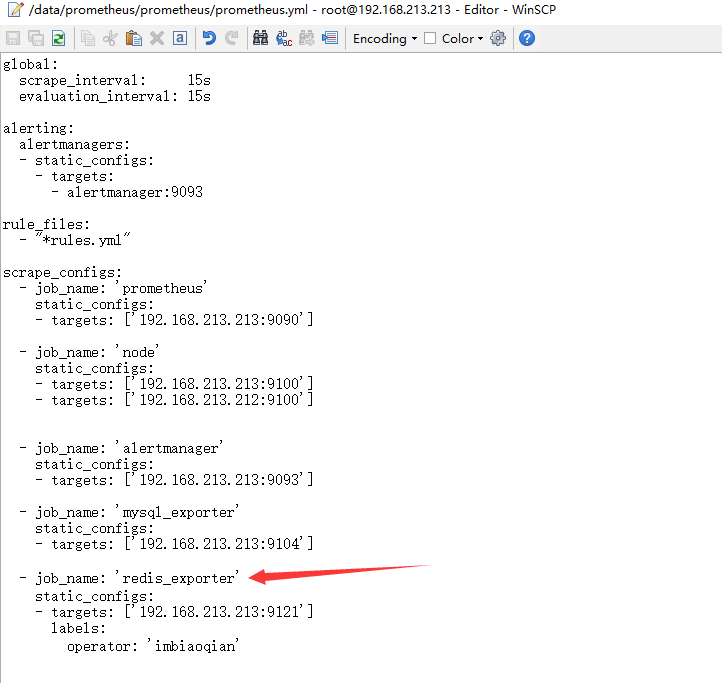

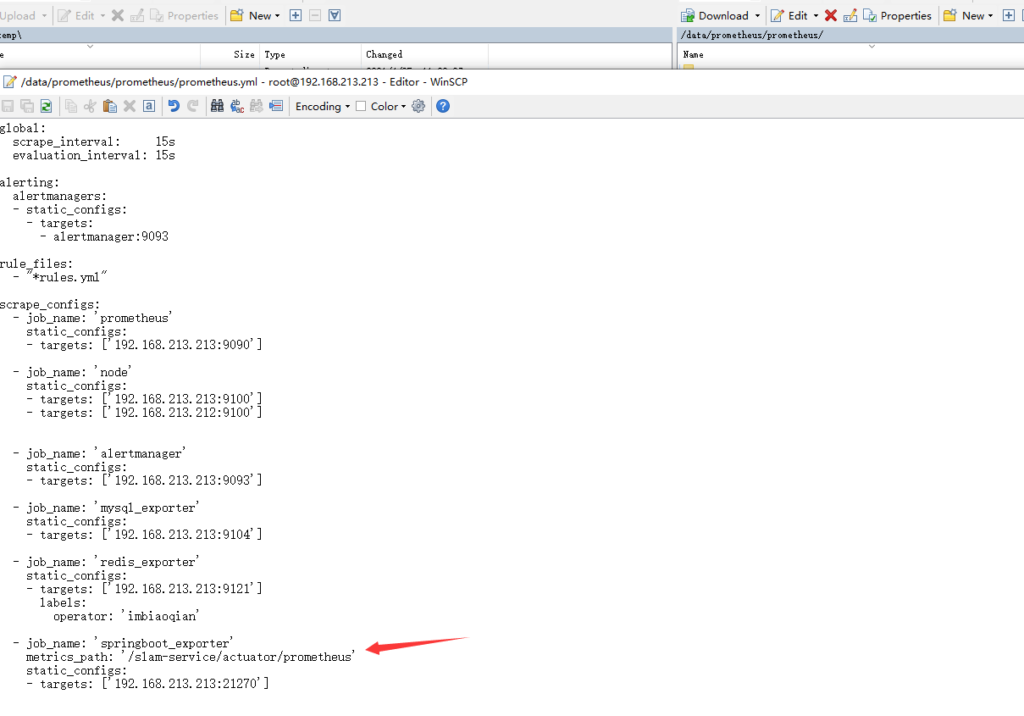

# Edit the Configuration File and Restart

In prometheus.yml, add the exporter as a scrape target; the exporter runs on 192.168.213.213.

# Check Status

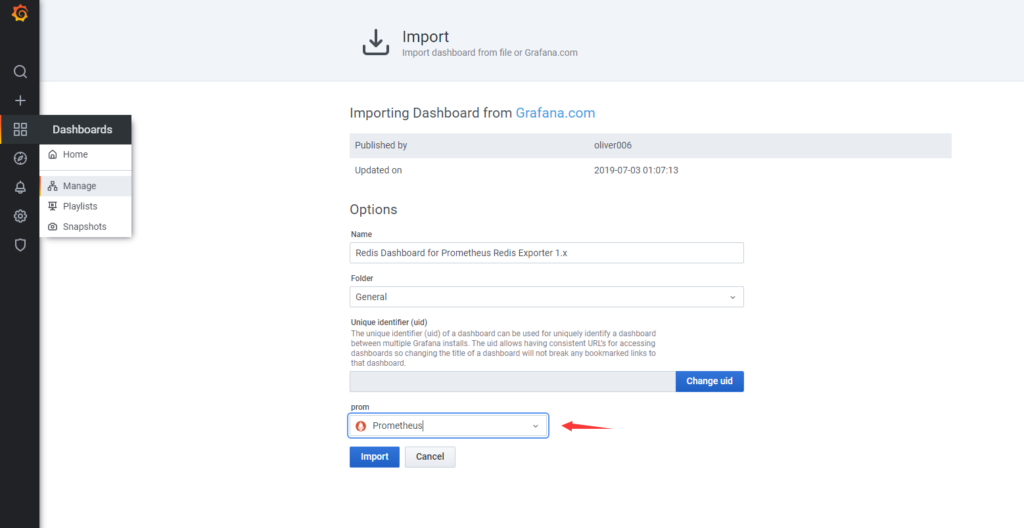

# Import a Dashboard

In Grafana, import dashboard ID 763, select the data source, and import.

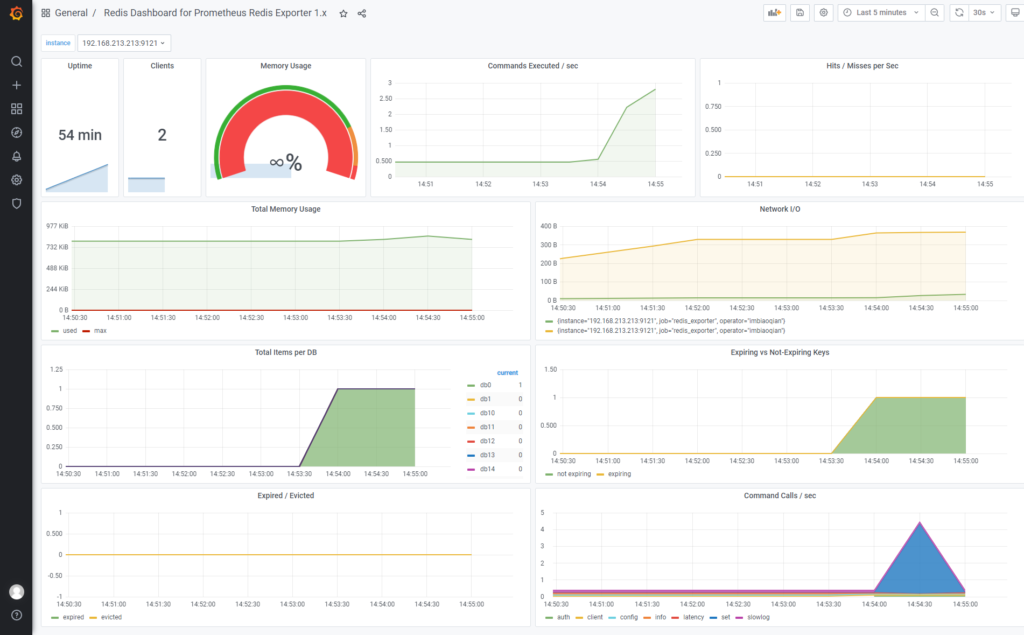

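# Result

# Appendix

A MySQL procedure that inserts values in a loop into the sd column of table sdd; the id column auto-increments.

    delimiter $$
    create procedure pre7()
    begin
      declare i int;
      set i = 1;
      -- insert the values 1 through 99
      while i < 100 do
        insert into sdd (sd) values (i);
        set i = i + 1;
      end while;
    end
    $$
    delimiter ;
    call pre7();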

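# Spring Boot

Reference article: https://zhuanlan.zhihu.com/p/366323778

# Dependency Configuration

The Spring Boot version used here is 2.0+, so the io.micrometer dependency is used directly. Earlier versions cannot use this approach and can use the official client instead, which is not covered here.

    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-prometheus</artifactId>
    </dependency>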

# Configuration File

Add the following parameters:

    management:
      endpoints:
        web:
          exposure:
            include: "*"
      metrics:
        tags:
          application: ${spring.application.name}

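# Notes

- management.endpoints.web.exposure.include: the value "*" exposes all Actuator endpoints over HTTP.

- management.metrics.tags.application: every metric reported by this application carries the application tag.

With this configuration in place, the application provides an /actuator/prometheus endpoint from which Prometheus pulls the metrics.

Application log: "EndpointLinksResolver - Exposing 22 endpoint(s) beneath base path '/slam-service/actuator'", where slam-service is the project name.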

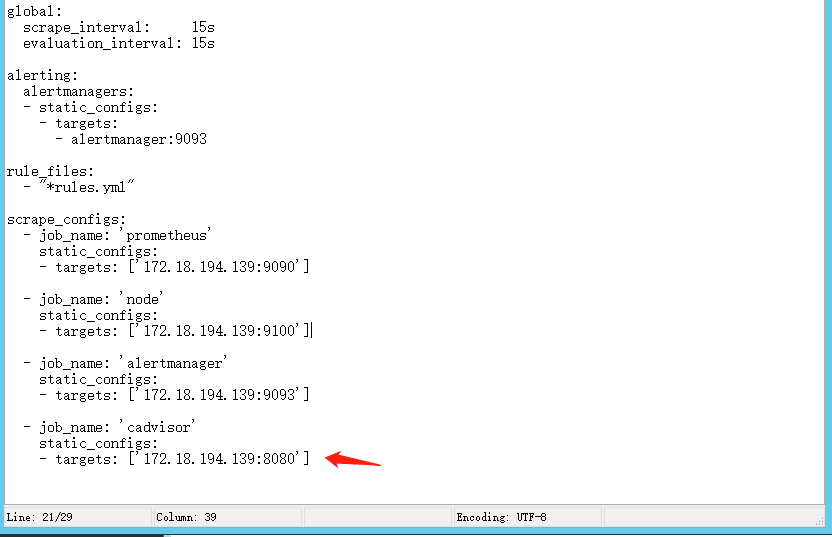
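To confirm that the application tag from the configuration really reaches the scraped output, the label values can be pulled out of the endpoint's response. This is a minimal sketch: the function name application_tags is hypothetical, and it simply lists the distinct application="..." label pairs found in text read from standard input.

```shell
# Sketch: list the distinct application="..." labels found in
# Prometheus exposition text read from standard input.
application_tags() {
    # Ignore HELP/TYPE comments, then extract and de-duplicate the label.
    grep -v '^#' | grep -o 'application="[^"]*"' | sort -u
}
```

With the base path from the log line above, a possible check is `curl -s http://IP:PORT/slam-service/actuator/prometheus | application_tags`, which should print a single line carrying the value of spring.application.name.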

# Edit the Configuration File and Restart

prometheus.yml

    global:
      scrape_interval: 15s
      evaluation_interval: 15s

    alerting:
      alertmanagers:
        - static_configs:
            - targets:
                - alertmanager:9093

    rule_files:
      - "*rules.yml"

    scrape_configs:
      - job_name: 'prometheus'
        static_configs:
          - targets: ['192.168.213.213:9090']

      - job_name: 'node'
        static_configs:
          - targets: ['192.168.213.213:9100']
          - targets: ['192.168.213.212:9100']

      - job_name: 'alertmanager'
        static_configs:
          - targets: ['192.168.213.213:9093']

      - job_name: 'mysql_exporter'
        static_configs:
          - targets: ['192.168.213.213:9104']

      - job_name: 'redis_exporter'
        static_configs:
          - targets: ['192.168.213.213:9121']
            labels:
              operator: 'imbiaoqian'

      - job_name: 'springboot_exporter'
        metrics_path: '/slam-service/actuator/prometheus'
        static_configs:
          - targets: ['192.168.213.213:21270']
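As the number of jobs grows, it helps to list what a given prometheus.yml actually scrapes before restarting. The sketch below prints the job names found in a configuration file; the function name list_scrape_jobs is hypothetical, and the line-based match is an assumption that holds for files laid out like the one above (it does not parse YAML and would also pick up a commented-out job_name line).

```shell
# Sketch: print the job names defined in a Prometheus config file.
# Assumption: each job is declared on its own "job_name: ..." line.
list_scrape_jobs() {
    grep 'job_name:' "$1" \
        | sed -e 's/.*job_name:[[:space:]]*//' \
              -e "s/['\"]//g" \
              -e 's/[[:space:]]*$//'
}
```

Running `list_scrape_jobs prometheus.yml` against the file above should list prometheus, node, alertmanager, mysql_exporter, redis_exporter, and springboot_exporter, one per line.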

# 查看状态

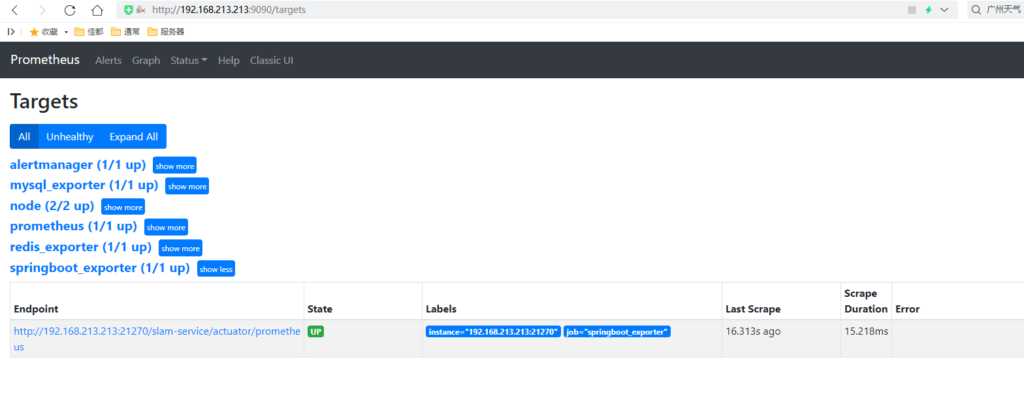

访问Prometheus

# 引入面板

访问Grafana,ID:12856

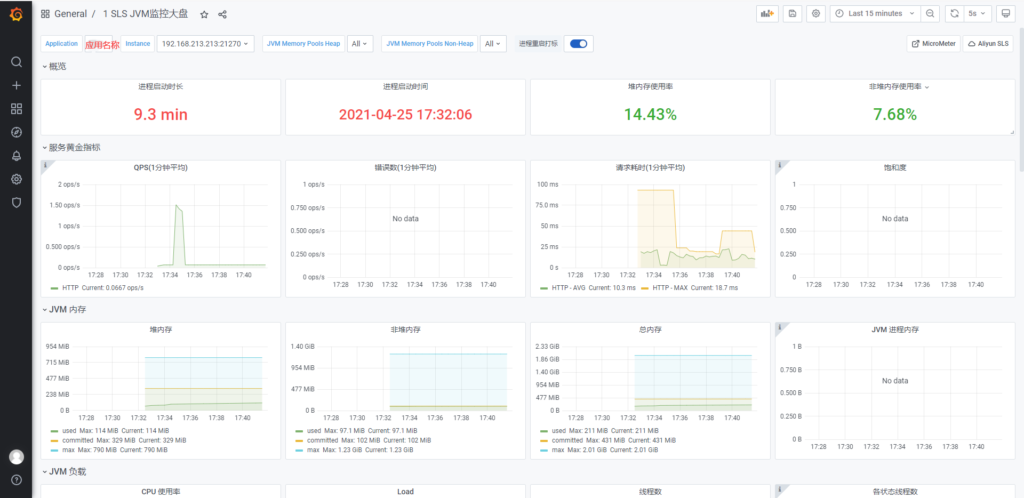

# Result

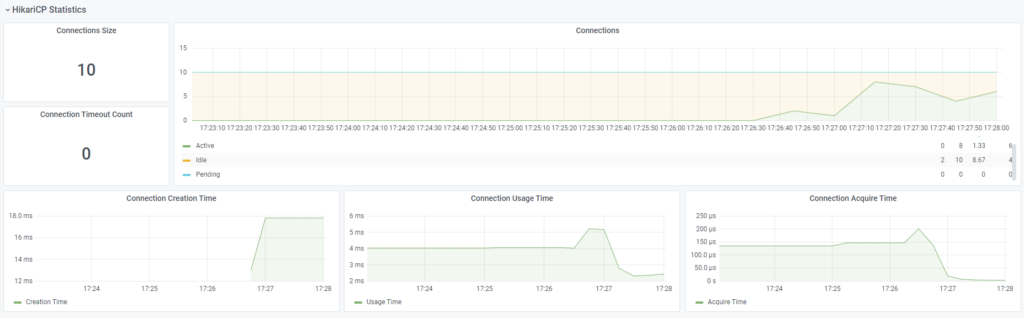

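# Spring Boot: Database Connection Pool

# Background

With Grafana dashboard ID 6756, none of the panels showed any data.

Investigation found two causes.

1. The dashboard variables did not match the labels reported by Spring Boot. The variable queries need to be changed in the Grafana dashboard settings:

[instance] label_values(jvm_classes_loaded_classes, instance)

[application] label_values(jvm_classes_loaded_classes{instance="$instance"}, application)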

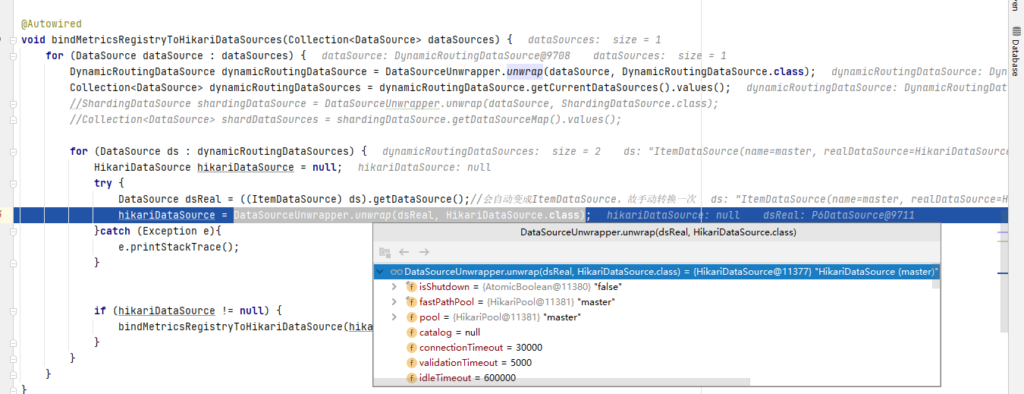

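2. The dashboard showed no HikariCP Statistics values, and the data reported by Spring Boot contained no related metrics either. Little information on this is available online; the solution below was reached through inference and a few reference articles.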

# 解决

# 说明

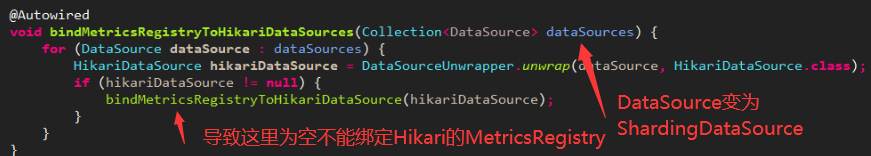

可参考此篇文章:https://blog.csdn.net/ankeway/article/details/108003149

但文章中示例是使用ShardingJdbc,下文使用的是MybatisPlus中的dynamic-datasource-spring-boot-starter多数据源配置,通过断点bindMetricsRegistryToHikariDataSources入口参数中分析推理,本次数据源采用的是DynamicRoutingDataSource。

数据源依赖为

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>dynamic-datasource-spring-boot-starter</artifactId>

</dependency>

2

3

4

原因与文章一致,都是因为DataSource变化导致为null

# Solution Example

# Add Dependencies

Add the following to the pom of the module that has the database connection dependency:

    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-core</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>

# Add a Configuration Class

Create the file PoolMetricsAutoConfiguration:

    import com.baomidou.dynamic.datasource.DynamicRoutingDataSource;
    import com.baomidou.dynamic.datasource.ds.ItemDataSource;
    import com.zaxxer.hikari.HikariDataSource;
    import com.zaxxer.hikari.metrics.micrometer.MicrometerMetricsTrackerFactory;
    import io.micrometer.core.instrument.MeterRegistry;
    import org.apache.commons.logging.Log;
    import org.apache.commons.logging.LogFactory;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.actuate.autoconfigure.metrics.MetricsAutoConfiguration;
    import org.springframework.boot.actuate.autoconfigure.metrics.export.simple.SimpleMetricsExportAutoConfiguration;
    import org.springframework.boot.autoconfigure.AutoConfigureAfter;
    import org.springframework.boot.autoconfigure.condition.ConditionalOnBean;
    import org.springframework.boot.autoconfigure.condition.ConditionalOnClass;
    import org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration;
    import org.springframework.boot.jdbc.DataSourceUnwrapper;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.core.log.LogMessage;

    import javax.sql.DataSource;
    import java.util.Collection;

    @Configuration(proxyBeanMethods = false)
    @AutoConfigureAfter({MetricsAutoConfiguration.class, DataSourceAutoConfiguration.class,
            SimpleMetricsExportAutoConfiguration.class})
    @ConditionalOnClass({DataSource.class, MeterRegistry.class})
    @ConditionalOnBean({DataSource.class, MeterRegistry.class})
    public class PoolMetricsAutoConfiguration {

        @Configuration(proxyBeanMethods = false)
        @ConditionalOnClass({DynamicRoutingDataSource.class, HikariDataSource.class})
        static class DataSourceMetricsConfiguration {

            private static final Log logger = LogFactory.getLog(DataSourceMetricsConfiguration.class);

            private final MeterRegistry registry;

            DataSourceMetricsConfiguration(MeterRegistry registry) {
                this.registry = registry;
            }

            @Autowired
            void bindMetricsRegistryToHikariDataSources(Collection<DataSource> dataSources) {
                for (DataSource dataSource : dataSources) {
                    DynamicRoutingDataSource dynamicRoutingDataSource = DataSourceUnwrapper.unwrap(dataSource, DynamicRoutingDataSource.class);
                    if (dynamicRoutingDataSource == null) {
                        // Not a dynamic routing data source; nothing to bind for this bean.
                        continue;
                    }
                    Collection<DataSource> dynamicRoutingDataSources = dynamicRoutingDataSource.getCurrentDataSources().values();
                    for (DataSource ds : dynamicRoutingDataSources) {
                        HikariDataSource hikariDataSource = null;
                        try {
                            // Alternative that casts directly instead of calling unwrap:
                            // DataSource dsReal = ((ItemDataSource) ds).getDataSource();
                            // hikariDataSource = DataSourceUnwrapper.unwrap(dsReal, HikariDataSource.class);

                            // Each entry is wrapped in an ItemDataSource, so convert it explicitly first.
                            ItemDataSource itemDataSource = ds.unwrap(ItemDataSource.class);
                            if (itemDataSource != null) {
                                DataSource dsReal = itemDataSource.getDataSource();
                                hikariDataSource = DataSourceUnwrapper.unwrap(dsReal, HikariDataSource.class);
                            }
                        } catch (Exception e) {
                            logger.warn("Failed to unwrap data source", e);
                        }
                        if (hikariDataSource != null) {
                            bindMetricsRegistryToHikariDataSource(hikariDataSource);
                        }
                    }
                }
            }

            private void bindMetricsRegistryToHikariDataSource(HikariDataSource hikari) {
                // Bind only when no registry or tracker factory has been set yet.
                if (hikari.getMetricRegistry() == null && hikari.getMetricsTrackerFactory() == null) {
                    try {
                        hikari.setMetricsTrackerFactory(new MicrometerMetricsTrackerFactory(this.registry));
                    } catch (Exception ex) {
                        logger.warn(LogMessage.format("Failed to bind Hikari metrics: %s", ex.getMessage()));
                    }
                }
            }
        }
    }

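# Result

Once each HikariDataSource is resolved and bound individually, monitoring data can be collected for each pool.

During testing, the entries returned by dynamicRoutingDataSource turned out to be ItemDataSource instances rather than the underlying DataSource. Without the explicit conversion, calling DataSourceUnwrapper.unwrap directly still returned null.

Accessing /actuator/prometheus again now shows monitoring data for the two configured data source connection pools.

Page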

# Original Text of the Reference Blog Post

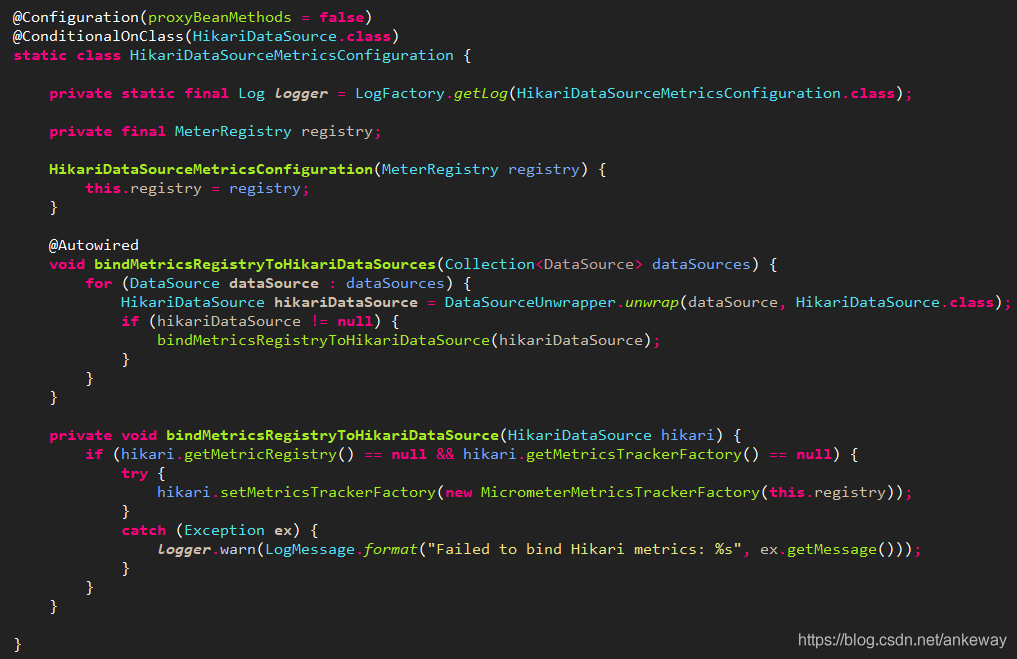

Since version 2.x, Spring Boot uses Hikari as its default database connection pool.

With the default spring.datasource configuration, the MetricsRegistry is bound through auto-configuration.

In the spring-boot-actuator-autoconfigure package, the class org.springframework.boot.actuate.autoconfigure.metrics.jdbc.DataSourcePoolMetricsAutoConfiguration contains the default binding logic for HikariDataSource metrics.

HikariCP also provides a concrete implementation for monitoring with Prometheus.

When the basic spring.datasource configuration is used, Spring Boot's auto-configuration combines with Hikari's monitoring logic, and the Prometheus metrics for the pool appear.

Configuration in application.yml:

    spring:
      datasource:
        driver-class-name: com.mysql.cj.jdbc.Driver
        type: com.zaxxer.hikari.HikariDataSource
        url: ${url}
        username: ${username}
        password: ${password}
        hikari:
          pool-name: HikariPool-1
          minimum-idle: 10
          maximum-pool-size: 20
          idle-timeout: 500000
          max-lifetime: 540000
          connection-timeout: 60000
          connection-test-query: SELECT 1

Once the Prometheus endpoint is enabled, the HikariCP metrics can be viewed at http://IP:PORT/actuator/prometheus, and Prometheus can scrape them in real time for display in charts.

    # HELP hikaricp_connections_max Max connections
    # TYPE hikaricp_connections_max gauge
    hikaricp_connections_max{pool="HikariPool-1",} 50.0
    # HELP hikaricp_connections_pending Pending threads
    # TYPE hikaricp_connections_pending gauge
    hikaricp_connections_pending{pool="HikariPool-1",} 0.0
    # HELP hikaricp_connections_timeout_total Connection timeout total count
    # TYPE hikaricp_connections_timeout_total counter
    hikaricp_connections_timeout_total{pool="HikariPool-1",} 0.0
    # HELP hikaricp_connections_acquire_seconds Connection acquire time
    # TYPE hikaricp_connections_acquire_seconds summary
    hikaricp_connections_acquire_seconds_count{pool="HikariPool-1",} 1.0
    hikaricp_connections_acquire_seconds_sum{pool="HikariPool-1",} 7.39E-5
    # HELP hikaricp_connections_acquire_seconds_max Connection acquire time
    # TYPE hikaricp_connections_acquire_seconds_max gauge
    hikaricp_connections_acquire_seconds_max{pool="HikariPool-1",} 7.39E-5
    # HELP hikaricp_connections_min Min connections
    # TYPE hikaricp_connections_min gauge
    hikaricp_connections_min{pool="HikariPool-1",} 3.0
    # HELP hikaricp_connections_usage_seconds Connection usage time
    # TYPE hikaricp_connections_usage_seconds summary
    hikaricp_connections_usage_seconds_count{pool="HikariPool-1",} 1.0
    hikaricp_connections_usage_seconds_sum{pool="HikariPool-1",} 0.025
    # HELP hikaricp_connections_usage_seconds_max Connection usage time
    # TYPE hikaricp_connections_usage_seconds_max gauge
    hikaricp_connections_usage_seconds_max{pool="HikariPool-1",} 0.025
    # HELP hikaricp_connections_creation_seconds_max Connection creation time
    # TYPE hikaricp_connections_creation_seconds_max gauge
    hikaricp_connections_creation_seconds_max{pool="HikariPool-1",} 0.094
    # HELP hikaricp_connections_creation_seconds Connection creation time
    # TYPE hikaricp_connections_creation_seconds summary
    hikaricp_connections_creation_seconds_count{pool="HikariPool-1",} 2.0
    hikaricp_connections_creation_seconds_sum{pool="HikariPool-1",} 0.178
    # HELP hikaricp_connections_active Active connections
    # TYPE hikaricp_connections_active gauge
    hikaricp_connections_active{pool="HikariPool-1",} 0.0
    # HELP hikaricp_connections Total connections
    # TYPE hikaricp_connections gauge
    hikaricp_connections{pool="HikariPool-1",} 3.0
    # HELP hikaricp_connections_idle Idle connections
    # TYPE hikaricp_connections_idle gauge
    hikaricp_connections_idle{pool="HikariPool-1",} 3.0
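A summary such as hikaricp_connections_acquire_seconds exposes a _sum and a _count, so the average time per acquisition is simply sum divided by count. The sketch below computes that average from exposition text on standard input; the function name avg_acquire_seconds is hypothetical, and it assumes a single pool, since with several pools the last one read wins.

```shell
# Sketch: average connection acquire time in seconds (sum / count).
# Reads Prometheus exposition text from standard input.
# Assumption: only one pool is present in the input.
avg_acquire_seconds() {
    awk '
        /^hikaricp_connections_acquire_seconds_sum[{]/   { sum = $NF }
        /^hikaricp_connections_acquire_seconds_count[{]/ { count = $NF }
        END {
            if (count > 0) printf "%.6f\n", sum / count
            else           print "n/a"     # no acquisitions recorded yet
        }
    '
}
```

A possible invocation is `curl -s http://IP:PORT/actuator/prometheus | avg_acquire_seconds`. This is the average since the application started; for a recent window, the same division is usually done in Prometheus over rates of the two series.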

However, once ShardingSphere is used as the sharding middleware, the DataSource seen by the auto-configuration changes from HikariDataSource to ShardingDataSource, so the binding to the Hikari MetricsRegistry cannot be completed. As a result, after introducing Sharding the HikariCP data no longer appears under /actuator/prometheus.

How can this be solved? We can imitate the logic of DataSourcePoolMetricsAutoConfiguration and bind each of the dataSources inside Sharding in a loop.

In Sharding's auto-configuration, org.apache.shardingsphere.shardingjdbc.spring.boot.SpringBootConfiguration places the configured database connections in a dataSourceMap. Binding the MetricsRegistry therefore means fetching the entries of that dataSourceMap and registering each one. A typical shardingsphere configuration that defines two data sources looks like this.

Configuration in application.yml:

    spring:
      shardingsphere:
        datasource:
          names: ds0, ds1
          ds0:
            type: com.zaxxer.hikari.HikariDataSource
            driver-class-name: com.mysql.cj.jdbc.Driver
            jdbcUrl: ${url}
            username: ${username}
            password: ${password}
            pool-name: HikariPool-1
            minimum-idle: 10
            maximum-pool-size: 20
            idle-timeout: 500000
            max-lifetime: 540000
            connection-timeout: 60000
            connection-test-query: SELECT 1
          ds1:
            type: com.zaxxer.hikari.HikariDataSource
            driver-class-name: com.mysql.cj.jdbc.Driver
            jdbcUrl: ${url}
            username: ${username}
            password: ${password}
            pool-name: HikariPool-2
            minimum-idle: 10
            maximum-pool-size: 20
            idle-timeout: 500000
            max-lifetime: 540000
            connection-timeout: 60000
            connection-test-query: SELECT 1

We also imitate DataSourceMetricsConfiguration and write a ShardingDataSourceMetricsConfiguration. The source is as follows:

    import java.util.Collection;

    import javax.sql.DataSource;

    import org.apache.commons.logging.Log;
    import org.apache.commons.logging.LogFactory;
    import org.apache.shardingsphere.shardingjdbc.jdbc.core.datasource.ShardingDataSource;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.actuate.autoconfigure.metrics.MetricsAutoConfiguration;
    import org.springframework.boot.actuate.autoconfigure.metrics.export.simple.SimpleMetricsExportAutoConfiguration;
    import org.springframework.boot.autoconfigure.AutoConfigureAfter;
    import org.springframework.boot.autoconfigure.condition.ConditionalOnBean;
    import org.springframework.boot.autoconfigure.condition.ConditionalOnClass;
    import org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration;
    import org.springframework.boot.jdbc.DataSourceUnwrapper;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.core.log.LogMessage;

    import com.zaxxer.hikari.HikariDataSource;
    import com.zaxxer.hikari.metrics.micrometer.MicrometerMetricsTrackerFactory;

    import io.micrometer.core.instrument.MeterRegistry;

    @Configuration(proxyBeanMethods = false)
    @AutoConfigureAfter({ MetricsAutoConfiguration.class, DataSourceAutoConfiguration.class,
            SimpleMetricsExportAutoConfiguration.class })
    @ConditionalOnClass({ DataSource.class, MeterRegistry.class })
    @ConditionalOnBean({ DataSource.class, MeterRegistry.class })
    public class ShardingDataSourcePoolMetricsAutoConfiguration {

        @Configuration(proxyBeanMethods = false)
        @ConditionalOnClass({ShardingDataSource.class, HikariDataSource.class})
        static class ShardingDataSourceMetricsConfiguration {

            private static final Log logger = LogFactory.getLog(ShardingDataSourceMetricsConfiguration.class);

            private final MeterRegistry registry;

            ShardingDataSourceMetricsConfiguration(MeterRegistry registry) {
                this.registry = registry;
            }

            @Autowired
            void bindMetricsRegistryToHikariDataSources(Collection<DataSource> dataSources) {
                for (DataSource dataSource : dataSources) {
                    ShardingDataSource shardingDataSource = DataSourceUnwrapper.unwrap(dataSource, ShardingDataSource.class);
                    // Bind every pool held in the sharding data source map.
                    Collection<DataSource> shardDataSources = shardingDataSource.getDataSourceMap().values();
                    for (DataSource ds : shardDataSources) {
                        HikariDataSource hikariDataSource = DataSourceUnwrapper.unwrap(ds, HikariDataSource.class);
                        if (hikariDataSource != null) {
                            bindMetricsRegistryToHikariDataSource(hikariDataSource);
                        }
                    }
                }
            }

            private void bindMetricsRegistryToHikariDataSource(HikariDataSource hikari) {
                if (hikari.getMetricRegistry() == null && hikari.getMetricsTrackerFactory() == null) {
                    try {
                        hikari.setMetricsTrackerFactory(new MicrometerMetricsTrackerFactory(this.registry));
                    }
                    catch (Exception ex) {
                        logger.warn(LogMessage.format("Failed to bind Hikari metrics: %s", ex.getMessage()));
                    }
                }
            }
        }
    }

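This is the key point: ShardingDataSource contains the actual data source connection pools, so once each HikariDataSource is resolved and bound individually, monitoring data can be collected for each of them.

Accessing http://IP:PORT/actuator/prometheus again now shows monitoring data for the two configured data source connection pools.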

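Appendix: explanation of the monitored metrics

The metric names in this list differ from those in the /actuator/prometheus output above, where the corresponding names are hikaricp_connections_pending, hikaricp_connections_acquire_seconds, hikaricp_connections_idle, hikaricp_connections_active, hikaricp_connections_usage_seconds, hikaricp_connections_timeout_total, and hikaricp_connections_creation_seconds.

Metric 1: hikaricp_pending_threads

hikaricp_pending_threads is the number of threads currently queued waiting for a connection (Gauge). If it keeps climbing, the DB connection pool has essentially no idle connections left.

Metric 2: hikaricp_connection_acquired_nanos

hikaricp_connection_acquired_nanos is the time spent waiting to acquire a connection (Summary); the 99th percentile is typically used.

Metric 3: hikaricp_idle_connections

hikaricp_idle_connections is the current number of idle connections (Gauge). HikariCP allows a minimum number of idle connections to be configured; if this metric stays high for long periods (equal to the maximum number of connections), consider lowering the configured minimum.

Metric 4: hikaricp_active_connections

hikaricp_active_connections is the number of connections currently in use (Gauge). If it hovers around the configured maximum number of connections for long periods, or stays pinned at the maximum, consider increasing the maximum number of connections.

Metric 5: hikaricp_connection_usage_millis

hikaricp_connection_usage_millis is the interval at which a connection is reused (Summary); the 99th percentile is typically used. It indicates the time between a connection being returned to the pool and being reused. For a rarely used data source this can reach the order of seconds. Combine this metric during traffic peaks with the active connection count to decide whether the minimum number of connections should be reduced: if it is still on the order of seconds at peak, the data source is not used frequently and the number of connections can be lowered.

Metric 6: hikaricp_connection_timeout_total

hikaricp_connection_timeout_total counts connection timeouts (Counter) and is usually charted per minute. It reflects the total number of timed-out connections in the pool; the timeout here refers to connection creation timeout. If connection creation times out frequently, one direction to investigate is to check with the operations team whether the network is healthy.

Metric 7: hikaricp_connection_creation_millis

hikaricp_connection_creation_millis is the time taken to create a connection successfully (Summary); the 99th percentile is typically used. It mainly reflects the network conditions between the current machine and the database. Inside an IDC it is of limited use, fluctuating abnormally only during network jitter or when communication between data centers is interrupted.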
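The advice on active connections can be turned into a quick number: active connections as a percentage of the pool maximum. The sketch below uses the Micrometer metric names from the /actuator/prometheus sample earlier in this document (hikaricp_connections_active and hikaricp_connections_max); the function name pool_utilization_percent is hypothetical, and a single pool is assumed.

```shell
# Sketch: active connections as a percentage of the pool maximum.
# Reads Prometheus exposition text from standard input.
# Assumption: only one pool is present in the input.
pool_utilization_percent() {
    awk '
        /^hikaricp_connections_active[{]/ { active = $NF }
        /^hikaricp_connections_max[{]/    { max = $NF }
        END {
            if (max > 0) printf "%.1f\n", active * 100 / max
            else         print "n/a"       # pool maximum not found
        }
    '
}
```

A value that stays close to 100 over time matches the situation described under Metric 4, where raising the maximum number of connections is worth considering. A single reading is only a snapshot, so the trend in Grafana remains the better guide.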

# Complete Deployment Package

Location

Link: https://pan.baidu.com/s/1U6tASaxXWXq3B1hBjOg1XA

Extraction code: 2v0q

# Changing the Time Zone

# prometheus

Use the image built from the Dockerfile below. Directory layout:

    ./
    ├── dockerfile
    └── prometheus-2.26.0.linux-amd64.tar.gz

Dockerfile

    FROM centos:7
    ADD prometheus-2.26.0.linux-amd64.tar.gz /
    RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
        mv /prometheus-2.26.0.linux-amd64 /prometheus
    WORKDIR /prometheus
    ENTRYPOINT [ "/prometheus/prometheus" ]
    CMD [ "--config.file=/etc/prometheus/prometheus.yml", \
          "--storage.tsdb.path=/prometheus", \
          "--web.console.libraries=/usr/share/prometheus/console_libraries", \
          "--web.console.templates=/usr/share/prometheus/consoles" ]

File location

Link: https://pan.baidu.com/s/19AOpkjEejplz7agsBR0gKg

Extraction code: x05e

Build:

    docker build -t prometheus_sh:v2.26.0 ./

# Adding Docker Monitoring

# Start cAdvisor

Add or modify docker-compose.yml to start the cadvisor service:

    version: '3.7'
    services:
      cadvisor:
        image: google/cadvisor:v0.33.0
        container_name: cadvisor
        restart: unless-stopped
        ports:
          - "8080:8080"
        volumes:
          - "/:/rootfs:ro"
          - "/var/run:/var/run:rw"
          - "/sys:/sys:ro"
          - "/var/lib/docker/:/var/lib/docker:ro"
          - "/dev/disk/:/dev/disk:ro"

Edit prometheus.yml and add a job:

    - job_name: 'cadvisor'
      static_configs:
        - targets: ['172.18.194.139:8080']

After the change, restart the monitoring stack.

# Import a Dashboard

In Grafana, import dashboard ID 893 or 179.